
Mission3

In Mission 3, I applied transfer learning to the model built in Mission 2 on the Korean food dataset, producing a model that classifies Korean health food.

```python
import os
import copy

import numpy as np
import matplotlib.pyplot as plt
import torch
import torch.nn as nn
import torch.optim as optim
from torchvision import datasets, transforms, models
from tqdm import tqdm
from sklearn.metrics import f1_score

# Use the GPU if one is available
device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')
print(device)
```

```python
# Unzip the datasets from Google Drive (Colab shell commands)
!unzip -qq drive/MyDrive/데이터셋.zip
!mkdir -p /content/health_train
!mkdir -p /content/health_val
!unzip -qq kfood_health_train.zip -d /content/health_train
!unzip -qq kfood_health_val.zip -d /content/health_val
```

```python
# Load the pretrained Mission 2 model (ResNet-50 with a 42-class head)
model = models.resnet50(weights=None)  # on torchvision < 0.13 use pretrained=False
model.fc = nn.Linear(2048, 42)
model.load_state_dict(torch.load('/content/drive/MyDrive/Colab Notebooks/제출폴더/mission2.pt', map_location=device))
model.to(device)

# Freeze the gradients of every layer
for param in model.parameters():
    param.requires_grad = False

# Unfreeze layer4
for param in model.layer4.parameters():
    param.requires_grad = True

# Replace the final fc layer: health_train has 13 classes, so the head is resized to match
num_in = model.fc.in_features
model.fc = nn.Linear(num_in, 13).to(device)  # a newly created layer has requires_grad=True by default
```

```python
# Data (train_loader / valid_loader hold dataset root paths, not DataLoader objects)
train_loader = '/content/health_train'
valid_loader = '/content/health_val'

mean = [0.5, 0.5, 0.5]
std = [0.5, 0.5, 0.5]

transform_train = transforms.Compose([
    transforms.Resize((224, 224)),
    transforms.ToTensor(),
    transforms.Normalize(mean, std)
])

transform_valid = transforms.Compose([
    transforms.Resize((224, 224)),
    transforms.ToTensor(),
    transforms.Normalize(mean, std)
])

dataset_train = datasets.ImageFolder(root=train_loader, transform=transform_train)
dataset_train_loader = torch.utils.data.DataLoader(dataset_train, batch_size=32, shuffle=True, num_workers=os.cpu_count())

# Validation dataset and loader
valid_train = datasets.ImageFolder(root=valid_loader, transform=transform_valid)
valid_train_loader = torch.utils.data.DataLoader(valid_train, batch_size=32, shuffle=False, num_workers=os.cpu_count())

criterion = nn.CrossEntropyLoss()
# Frozen parameters never receive gradients, so Adam only updates layer4 and fc
optimizer = optim.Adam(model.parameters(), lr=0.00001)
```

```python
# Histories for the accuracy/loss plots
train_loss_history = []
val_loss_history = []
train_acc_history = []
val_acc_history = []


def train(model, criterion, optimizer, num_epochs):
    best_model_wts = copy.deepcopy(model.state_dict())
    best_acc = 0.0

    for epoch in range(num_epochs):
        model.train()
        print(f'Epoch {epoch}/{num_epochs - 1}')
        print('-' * 10)

        running_loss = 0.0
        running_corrects = 0

        # Wrap the data loader in tqdm to show a progress bar
        # (desc labels the bar; leave=False clears the bar once the loop finishes)
        for inputs, labels in tqdm(dataset_train_loader, desc="Training", leave=False):
            inputs = inputs.to(device)
            labels = labels.to(device)

            outputs = model(inputs)
            loss = criterion(outputs, labels)

            # Zero the parameter gradients, backpropagate, and update
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

            # Statistics
            _, preds = torch.max(outputs, 1)
            running_loss += loss.item() * inputs.size(0)
            running_corrects += torch.sum(preds == labels.data)

        epoch_loss = running_loss / len(dataset_train)
        epoch_acc = running_corrects.double() / len(dataset_train)
        train_loss_history.append(epoch_loss)
        train_acc_history.append(epoch_acc.item())

        # Validation phase
        val_loss, val_accuracy = evaluate_model(model, criterion)
        val_loss_history.append(val_loss)
        val_acc_history.append(val_accuracy)

        print(f'Train Loss: {epoch_loss:.4f} Acc: {epoch_acc:.4f}')
        print(f'Val Loss: {val_loss:.4f} Acc: {val_accuracy:.4f}')

        # Keep the weights with the best validation accuracy
        if val_accuracy > best_acc:
            best_acc = val_accuracy
            best_model_wts = copy.deepcopy(model.state_dict())

    print(f'Best Validation Accuracy: {best_acc:.4f}')
    model.load_state_dict(best_model_wts)
    return model
```

```python
# Evaluate the model on the validation set during training
def evaluate_model(model, criterion):
    model.eval()
    total_loss = 0.0
    total_corrects = 0

    with torch.no_grad():
        for inputs, labels in valid_train_loader:
            inputs, labels = inputs.to(device), labels.to(device)
            outputs = model(inputs)
            loss = criterion(outputs, labels)
            _, preds = torch.max(outputs, 1)
            total_loss += loss.item() * inputs.size(0)
            total_corrects += torch.sum(preds == labels.data)

    accuracy = total_corrects.double() / len(valid_train)
    avg_loss = total_loss / len(valid_train)
    return avg_loss, accuracy.item()
```
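Both `train` and `evaluate_model` multiply each batch loss by `inputs.size(0)` before dividing by the dataset size. Because `nn.CrossEntropyLoss` averages over the batch by default, this recovers the exact per-sample mean even when the last batch is smaller than 32. A small check with random logits (10 samples, 13 classes, and the batch split are arbitrary choices for illustration):

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
criterion = nn.CrossEntropyLoss()  # reduction='mean' by default

# Toy data: 10 samples, 13 classes
logits = torch.randn(10, 13)
labels = torch.randint(0, 13, (10,))

# Loss over the whole "dataset" at once
full_loss = criterion(logits, labels).item()

# The same data split into uneven batches of 4, 4 and 2
weighted_sum = 0.0
batch_means = []
for start, end in [(0, 4), (4, 8), (8, 10)]:
    x, y = logits[start:end], labels[start:end]
    loss = criterion(x, y)
    weighted_sum += loss.item() * x.size(0)  # batch mean x batch size = summed loss
    batch_means.append(loss.item())

weighted_avg = weighted_sum / len(labels)        # what train/evaluate_model compute
naive_avg = sum(batch_means) / len(batch_means)  # mean of batch means: over-weights the short last batch
print(f"full: {full_loss:.4f}  weighted: {weighted_avg:.4f}  naive: {naive_avg:.4f}")
```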

```python
def plot_graphs(train_loss_history, val_loss_history, train_acc_history, val_acc_history):
    plt.figure(figsize=(12, 4))

    plt.subplot(1, 2, 1)
    plt.plot(train_loss_history, label='Train Loss')
    plt.plot(val_loss_history, label='Validation Loss')
    plt.xlabel('Epochs')
    plt.ylabel('Loss')
    plt.legend()

    plt.subplot(1, 2, 2)
    plt.plot(train_acc_history, label='Train Accuracy')
    plt.plot(val_acc_history, label='Validation Accuracy')
    plt.xlabel('Epochs')
    plt.ylabel('Accuracy')
    plt.legend()

    plt.tight_layout()
    # Save the figure as an image
    plt.savefig('/content/drive/MyDrive/Mission3_Graph.png')
```

```python
# Load the fine-tuned Mission 3 model
pt_model = models.resnet50(weights=None)
pt_model.fc = nn.Linear(pt_model.fc.in_features, 13)
checkpoint = torch.load("/content/drive/MyDrive/Colab Notebooks/제출폴더/mission3.pt", map_location=device)
pt_model.load_state_dict(checkpoint)
pt_model = pt_model.to(device)

# Validation data
valid_loader = '/content/health_val'
mean = [0.5, 0.5, 0.5]
std = [0.5, 0.5, 0.5]

transform_valid = transforms.Compose([
    transforms.Resize((224, 224)),
    transforms.ToTensor(),
    transforms.Normalize(mean, std)
])

valid_train = datasets.ImageFolder(root=valid_loader, transform=transform_valid)
valid_train_loader = torch.utils.data.DataLoader(valid_train, batch_size=32, shuffle=False, num_workers=os.cpu_count())
```

```python
from warnings import filterwarnings

import torch
from torch import nn
from torch.utils.data import DataLoader
from torch.backends import cudnn
from tqdm.notebook import tqdm

filterwarnings('ignore')
cudnn.benchmark = True  # let cuDNN pick the fastest kernels for the fixed 224x224 input size
```

```python
# Move the model to the available device
pt_model = pt_model.to(device)

y_valid = []
prediction = []


@torch.no_grad()  # inference only: no gradients are needed
def validate_epoch(model: nn.Module, data_loader: DataLoader, device: torch.device):
    model.eval()
    accuracies = []

    for images, labels in tqdm(data_loader, total=len(data_loader), mininterval=1, desc='measuring accuracy'):
        images = images.to(device)
        labels = labels.to(device)

        logits = model(images)
        pred = torch.argmax(logits, dim=1)

        # Store the ground-truth labels and the model's predicted labels
        y_valid.extend(labels.tolist())
        prediction.extend(pred.tolist())

        accuracies.append(pred == labels)

    accuracy = torch.cat(accuracies).float().mean() * 100
    return accuracy.item()


print(f"Validation Accuracy: {validate_epoch(pt_model, valid_train_loader, device):.2f}")
```

```python
import matplotlib.pyplot as plt
import matplotlib.font_manager as fm

plt.rc('font', family='NanumBarunGothic')  # font to use: NanumBarunGothic

# Use NanumGothic (this overrides the setting above) so the Korean class names render
font_path = '/usr/share/fonts/truetype/nanum/NanumGothic.ttf'
font_name = fm.FontProperties(fname=font_path).get_name()
plt.rc('font', family=font_name)
```

```python
from sklearn.metrics import confusion_matrix
import seaborn as sns

classes = valid_train.classes

# Build the confusion matrix for the validation set from the ground-truth and predicted labels
confusion = confusion_matrix(y_valid, prediction)

# Visualize
plt.figure(figsize=(12, 12))
sns.heatmap(confusion, annot=True, fmt='d', cmap='Blues', xticklabels=classes, yticklabels=classes)
plt.xlabel('Predicted')
plt.ylabel('Actual')
plt.title('Confusion Matrix')
plt.show()
```
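Raw counts make classes with more validation images look more prominent in the heatmap. `confusion_matrix` also accepts `normalize='true'` (scikit-learn 0.22 and later), which turns each row into proportions of that actual class; the diagonal then equals the per-class recall. A sketch on toy labels (not the post's data); with the real matrix, the heatmap would need `fmt='.2f'` instead of `fmt='d'`:

```python
import numpy as np
from sklearn.metrics import confusion_matrix, recall_score

# Toy labels for 3 classes (illustrative only)
y_true = [0, 0, 1, 1, 1, 2]
y_pred = [0, 1, 1, 1, 0, 2]

# normalize='true' divides each row by the number of samples of that actual class
cm_norm = confusion_matrix(y_true, y_pred, normalize='true')
print(np.round(cm_norm, 3))

# The diagonal of the row-normalized matrix is the per-class recall
per_class_recall = recall_score(y_true, y_pred, average=None)
print(np.diag(cm_norm), per_class_recall)
```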

```python
from sklearn.metrics import f1_score


# Compute the F1 score for each class
@torch.no_grad()
def calculate_f1_scores(model, data_loader, device):
    model.eval()
    f1_scores = []

    for class_num in range(13):  # loop over classes 0 to 12
        true_labels = []
        predicted_labels = []

        for images, labels in data_loader:
            images = images.to(device)
            labels = labels.to(device)

            logits = model(images)
            pred = torch.argmax(logits, dim=1)

            # One-vs-rest: record, for every image, whether its label / prediction is this class
            true_labels.extend((labels == class_num).cpu().numpy())
            predicted_labels.extend((pred == class_num).cpu().numpy())

        # Compute the binary F1 score for this class and store it in f1_scores
        f1 = f1_score(true_labels, predicted_labels)
        f1_scores.append(f1)

    print("cls_f1_scores: ", f1_scores)
    return f1_scores


f1_scores = calculate_f1_scores(pt_model, valid_train_loader, device)
```
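`calculate_f1_scores` runs inference over the whole validation set once per class, i.e. 13 full passes. Collecting the predictions once and calling `f1_score(..., average=None)` yields the same one-vs-rest F1 per class in a single pass. The check below compares the two computations on toy labels; in the post's setting, the `y_valid` and `prediction` lists filled by `validate_epoch` could be passed in instead (that substitution is a suggestion, not what the post does).

```python
import numpy as np
from sklearn.metrics import f1_score

# Toy ground-truth and predicted labels for 4 classes (illustrative only)
y_true = np.array([0, 0, 1, 1, 2, 2, 3, 3, 3, 1])
y_pred = np.array([0, 1, 1, 1, 2, 0, 3, 3, 2, 1])
num_classes = 4

# One call: per-class F1 directly from sklearn
f1_per_class = f1_score(y_true, y_pred, labels=list(range(num_classes)), average=None)

# The post's approach: binary F1 on "is this class?" masks, one class at a time
f1_one_vs_rest = [
    f1_score((y_true == c).astype(int), (y_pred == c).astype(int))
    for c in range(num_classes)
]

print(np.round(f1_per_class, 3))
print(np.round(f1_one_vs_rest, 3))
```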

```python
# Must follow the ImageFolder class order (valid_train.classes)
class_names = ['가리비', '갈비찜', '고등어', '김치국', '낚지볶음', '돼지갈비찜', '된장찌개', '떡국', '모듬초밥', '배추김치', '부대찌개',
               '순대', '오리로스구이']

# Find the indices of the top 3 and bottom 3 classes by F1 score
top_3_indices = sorted(range(len(f1_scores)), key=lambda i: f1_scores[i], reverse=True)[:3]
bottom_3_indices = sorted(range(len(f1_scores)), key=lambda i: f1_scores[i])[:3]

# Assign colors: top 3 in red, bottom 3 in green, the rest in blue
colors = ['#ff9999' if i in top_3_indices else '#99e699' if i in bottom_3_indices else '#99b3ff'
          for i in range(len(f1_scores))]

# Draw the chart
plt.figure(figsize=(12, 10))
bars = plt.barh(class_names, f1_scores, color=colors)

# Annotate the F1 values of the top 3 and bottom 3 classes
for i, (bar, score) in enumerate(zip(bars, f1_scores)):
    if i in top_3_indices or i in bottom_3_indices:
        plt.text(score - 0.02, i, f'{score:.2f}', ha='center', va='center', color='black', fontsize=11, fontweight='bold')

# Bold the top 3 and bottom 3 labels to highlight them
yticklabels = plt.gca().get_yticklabels()
for idx, label in enumerate(yticklabels):
    if idx in top_3_indices or idx in bottom_3_indices:
        label.set_fontweight('bold')

plt.xlabel('F1 Score')
plt.title('상하위 3개 강조 F1 Score')  # "F1 Score, top and bottom 3 highlighted"
plt.tight_layout()
plt.show()
```

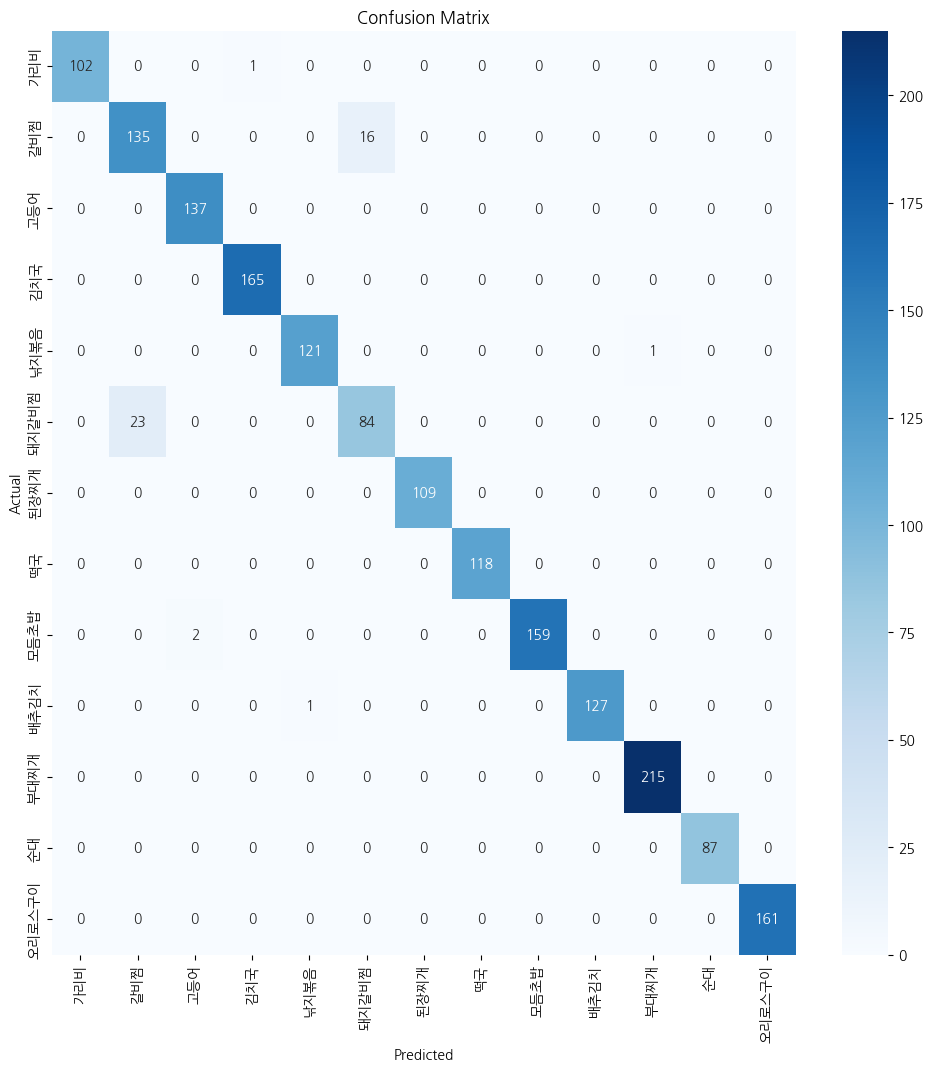

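With transfer learning, accuracy rose substantially, to above 97%. I also analyzed the results, as follows.

The confusion matrix shows that the model has trouble telling 돼지갈비찜 (braised pork ribs) apart from 갈비찜 (braised short ribs). However, these images are ambiguous enough that even a person would struggle to distinguish them, so apart from this pair the model classifies the validation set almost perfectly.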
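Instead of reading the most-confused pair off the heatmap by eye, it can be pulled from the matrix directly: zero out the diagonal (correct predictions) and take the largest remaining cell. A minimal sketch on a toy 3-class matrix; `most_confused_pair` is a hypothetical helper, and in the post `confusion` and `classes` would take the place of the toy data.

```python
import numpy as np


def most_confused_pair(cm: np.ndarray) -> tuple[int, int, int]:
    """Hypothetical helper: return (actual, predicted, count) for the largest off-diagonal cell."""
    off_diag = cm.copy()           # keep the caller's matrix unchanged
    np.fill_diagonal(off_diag, 0)  # ignore correct predictions
    actual, predicted = np.unravel_index(np.argmax(off_diag), off_diag.shape)
    return int(actual), int(predicted), int(off_diag[actual, predicted])


# Toy confusion matrix: rows = actual class, columns = predicted class
cm = np.array([
    [50, 0, 1],
    [2, 45, 7],
    [0, 3, 48],
])
actual, predicted, count = most_confused_pair(cm)
print(f"class {actual} was predicted as class {predicted} {count} times")
```

With the real matrix, `classes[actual]` and `classes[predicted]` give the folder names of the pair.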
